Containerization packages applications and their dependencies in isolated containers. Kubernetes automates deploying, scaling, and managing these containers.

Containerization gives applications a consistent environment across platforms, so they run reliably regardless of where they are deployed. Kubernetes, an open-source platform, orchestrates these containers and automates routine operations. It schedules containers across a cluster of machines, balancing resource use and scaling applications as demand changes.

These technologies together enhance agility, improve efficiency, and reduce deployment times. Businesses can achieve faster development cycles and more resilient applications. Containerization and Kubernetes have become essential for modern DevOps practices, supporting microservices architectures and facilitating continuous integration and delivery. Embracing these tools can significantly elevate your software development and deployment strategy.


Introduction To Containerization

Containerization has revolutionized software development. It offers a way to run applications in isolated environments. This ensures consistency across different stages of development.

What Is Containerization?

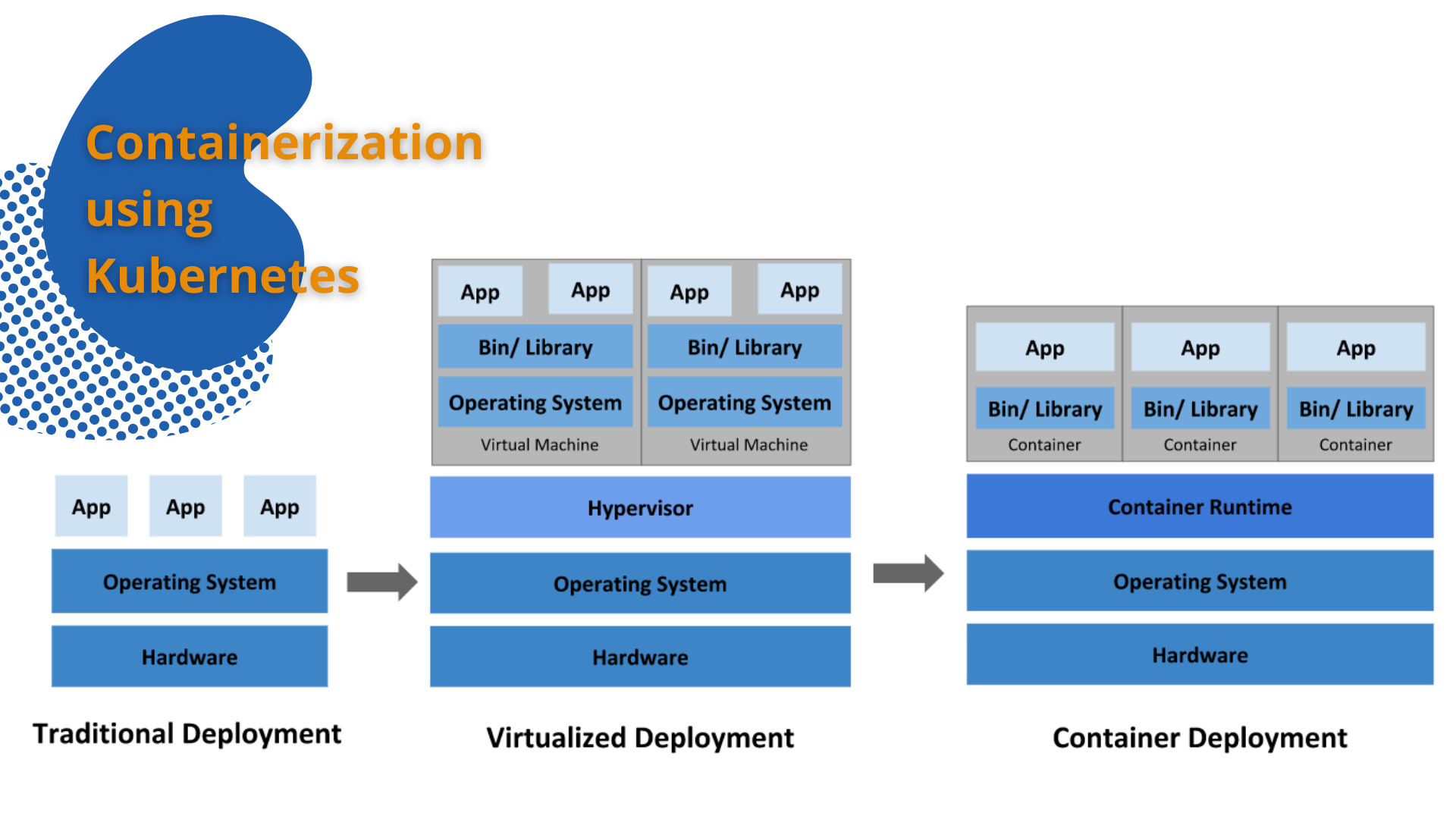

Containerization packages an application and its dependencies into a single, lightweight image. A container started from that image runs consistently in any environment that has a compatible container runtime.

Containers are different from virtual machines. Containers share the host system’s OS kernel. This makes them more efficient and lightweight.

Benefits Of Containerization

Containerization offers several key benefits for developers and organizations:

- Portability: Containers run the same way in any environment.

- Scalability: Easily scale applications up or down.

- Resource Efficiency: Containers use fewer resources than virtual machines.

- Isolation: Each container runs in its own environment, reducing conflicts.

- Consistency: Containers ensure the application behaves the same everywhere.

| Benefit | Description |
|---|---|
| Portability | Run containers consistently across different environments. |
| Scalability | Scale applications easily and efficiently. |
| Resource Efficiency | Containers use fewer resources than virtual machines. |
| Isolation | Containers run in their own isolated environments. |
| Consistency | Ensure applications behave the same in any environment. |

Key Components Of Containerization

Containerization has revolutionized how applications are deployed and managed. It offers a lightweight, efficient way to run software across different environments. Understanding the key components of containerization is essential for leveraging its full potential.

Containers Vs Virtual Machines

Containers and Virtual Machines (VMs) both enable isolated environments. But they are different in structure and performance. Here’s a comparison:

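| Feature | Containers | Virtual Machines |
|---|---|---|
| Isolation | Process-level (shared host kernel) | Hardware-level (separate guest OS) |
| Startup Time | Typically seconds or less | Typically tens of seconds to minutes |
| Resource Usage | Efficient | Heavy |
| Size | Typically MBs | Typically GBs |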

Popular Container Tools

There are several tools available for containerization. Each tool offers unique features and benefits. Here are some of the most popular ones:

- Docker: The most well-known container platform. It simplifies the creation and management of containers.

- Podman: A daemonless tool for managing containers. It provides better security by running in rootless mode.

- containerd: An industry-standard core container runtime. It is used in Docker and Kubernetes.

- rkt: A pod-native container engine focused on security and composability. The project has been archived and is no longer maintained.

- LXC: An older but still relevant tool. It offers a complete Linux environment in a container.

These tools make it easier to manage and deploy applications. They provide the necessary infrastructure to support containerization.

Understanding Kubernetes

Kubernetes is a powerful tool for managing containerized applications. It helps automate deployment, scaling, and operations of application containers. This section will explain Kubernetes in simple terms.

What Is Kubernetes?

Kubernetes is an open-source platform. It orchestrates containerized applications. Google initially developed it. Now, the Cloud Native Computing Foundation maintains it.

Kubernetes helps manage workloads. It runs containers built with tools like Docker. With Kubernetes, you can automate tasks such as deployment and scaling.

Core Features Of Kubernetes

Kubernetes offers several features. These features make it powerful and flexible. Below are some core features:

- Automated Scheduling: Kubernetes schedules containers. It finds the best server to run them.

- Self-Healing: Kubernetes can restart failed containers. It replaces and reschedules them.

- Horizontal Scaling: You can scale your applications easily. Add or remove containers based on needs.

- Service Discovery: Kubernetes gives each set of containers a stable IP address and DNS name. No external discovery tool is needed.

- Storage Orchestration: Kubernetes mounts storage systems. It supports local and cloud storage.

| Feature | Description |
|---|---|
| Automated Scheduling | Finds the best server to run containers. |
| Self-Healing | Restarts and replaces failed containers. |
| Horizontal Scaling | Scales applications by adding or removing containers. |
| Service Discovery | Discovers services without external tools. |
| Storage Orchestration | Mounts various storage systems. |
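As a rough illustration of storage orchestration, the sketch below requests a volume and mounts it into a pod. The names `data-claim` and `data-pod`, the 1Gi size, and the mount path are hypothetical, and the example assumes the cluster has a default StorageClass that can provision the volume.

```yaml
# Hypothetical claim: asks the cluster for 1Gi of storage.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: data-claim
spec:
  accessModes:
  - ReadWriteOnce          # mountable read-write by a single node
  resources:
    requests:
      storage: 1Gi
---
# Hypothetical pod that mounts the claimed volume.
apiVersion: v1
kind: Pod
metadata:
  name: data-pod
spec:
  containers:
  - name: app
    image: nginx:latest
    volumeMounts:
    - name: data
      mountPath: /usr/share/nginx/html
  volumes:
  - name: data
    persistentVolumeClaim:
      claimName: data-claim   # must match the claim name above
```

The pod refers only to the claim, not to a specific disk, so the same manifest can work with local or cloud-backed storage.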

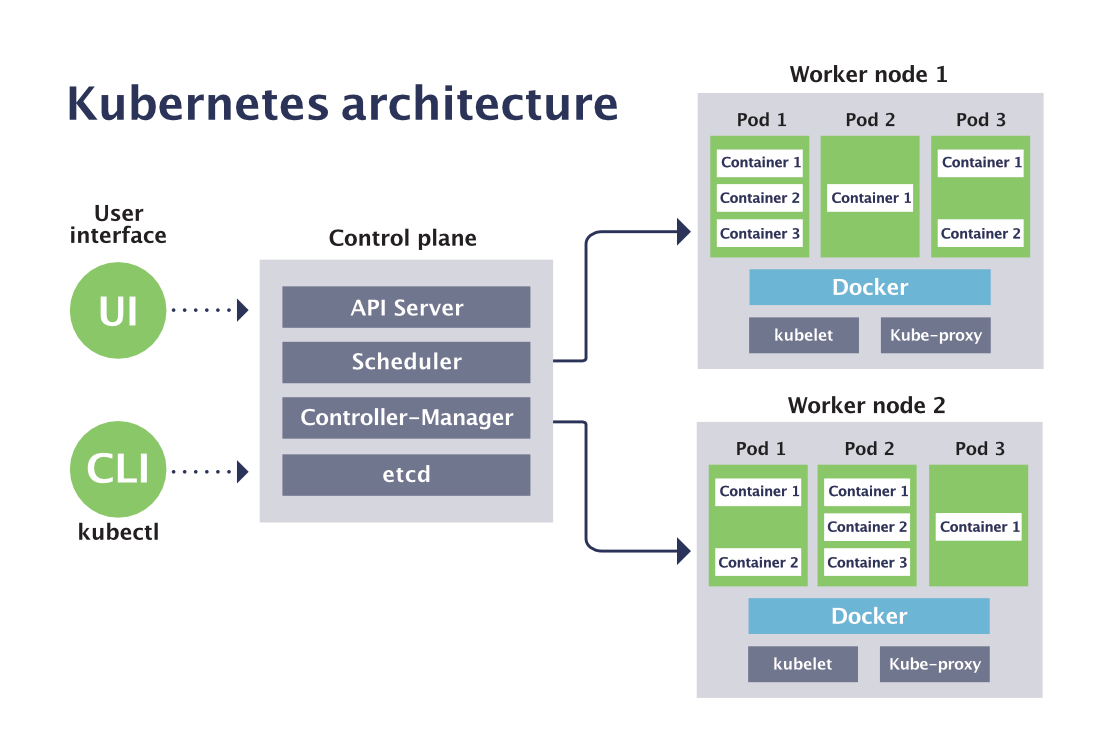

Kubernetes Architecture

Kubernetes is a powerful system for managing containerized applications. Its architecture is designed for scalability, resilience, and ease of use. Understanding Kubernetes architecture helps in efficiently running applications in production.

Master Components

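The master components, now usually called the control plane, are the brain of Kubernetes. They make the cluster-wide decisions, such as where to schedule pods and how to respond when the cluster's state changes.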

- API Server: The API server is the front end of the Kubernetes control plane. It exposes the Kubernetes API.

- etcd: This is a consistent and highly-available key-value store used for all cluster data.

- Scheduler: The scheduler watches for newly created pods with no assigned node and selects a node for them to run on.

- Controller Manager: This includes various controllers that regulate the state of the system.

Node Components

Node components run on every node, maintaining running pods and providing the Kubernetes runtime environment.

- Kubelet: An agent that ensures containers are running in a pod.

- Kube-proxy: A network proxy that maintains network rules on nodes. It allows communication to pods from inside or outside the cluster.

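- Container Runtime: The software responsible for running containers. containerd and CRI-O are common choices, and Kubernetes supports any runtime that implements its Container Runtime Interface (CRI). Since v1.24, Docker Engine is no longer supported directly and requires the cri-dockerd adapter.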

Container Orchestration

Container orchestration is the automated management of containerized applications. It involves coordinating and managing containers to ensure smooth application deployment. This section delves into the importance of orchestration and why Kubernetes is a leading tool.

Importance Of Orchestration

Orchestration handles the complex tasks of managing containers. It ensures containers run efficiently across different environments. Key benefits include:

- Scalability: Automatically scale applications based on demand.

- High Availability: Maintain application uptime with automated failovers.

- Resource Optimization: Efficiently use hardware resources.

- Load Balancing: Distribute traffic evenly across containers.

Kubernetes As An Orchestration Tool

Kubernetes is a powerful tool for container orchestration. It was developed by Google and is now maintained by the CNCF. Key features of Kubernetes include:

- Automatic Binpacking: Efficiently place containers based on resource requirements.

- Self-Healing: Restart failed containers and replace them automatically.

- Horizontal Scaling: Scale applications up and down with a simple command.

- Service Discovery: Automatically assign IP addresses and a DNS name for each set of containers.

These features make Kubernetes ideal for managing complex applications in production.
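Bin packing works from the resource requirements each container declares. A minimal sketch, assuming a hypothetical pod named `web` with illustrative CPU and memory values:

```yaml
# Hypothetical pod; the name and resource values are illustrative only.
apiVersion: v1
kind: Pod
metadata:
  name: web
spec:
  containers:
  - name: web
    image: nginx:latest
    resources:
      requests:            # amount the scheduler reserves when choosing a node
        cpu: 250m          # a quarter of one CPU core
        memory: 128Mi
      limits:              # upper bound enforced while the container runs
        cpu: 500m
        memory: 256Mi
```

The scheduler only places the pod on a node with enough unreserved capacity to satisfy the requests. The limits cap what the container may consume once it is running.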

Deploying Applications With Kubernetes

Deploying applications can be a complex task. Kubernetes simplifies this process. This tool helps manage containerized applications. It makes scaling and deploying easy.

Setting Up A Kubernetes Cluster

To start, you need a Kubernetes cluster. This cluster consists of master and worker nodes.

- Master node: Manages the cluster.

- Worker nodes: Run the applications.

Install Kubernetes on your system. Use tools like Minikube for local setups. For cloud environments, use services like Google Kubernetes Engine (GKE) or Amazon EKS.

Once installed, configure the cluster using kubectl. This command-line tool interacts with the cluster.

To check the nodes, use the command:

    kubectl get nodes

This command lists all nodes in the cluster. Ensure all nodes are in a “Ready” state.

Deploying A Simple Application

Deploying an application on Kubernetes involves creating a deployment file. This file is written in YAML format.

Here is a sample YAML file for deploying a simple Nginx application:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
    spec:
      replicas: 3              # number of pod copies to keep running
      selector:
        matchLabels:
          app: nginx           # must match the pod template labels below
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx:latest
            ports:
            - containerPort: 80   # port the container listens on

Save this file as nginx-deployment.yaml. Use the following command to deploy:

    kubectl apply -f nginx-deployment.yaml

This command creates a deployment with three replicas of the Nginx container.

To check the status of the deployment, use:

    kubectl get deployments

This command shows the current state of your deployment. Ensure the READY column shows 3/3, meaning all three replicas are available.

Access the application using a service. Create a service YAML file:

    apiVersion: v1
    kind: Service
    metadata:
      name: nginx-service
    spec:
      selector:
        app: nginx             # routes traffic to pods with this label
      ports:
      - protocol: TCP
        port: 80               # port exposed by the service
        targetPort: 80         # port on the container
      type: LoadBalancer

Save this file as nginx-service.yaml. Apply it using:

    kubectl apply -f nginx-service.yaml

This command exposes the Nginx application via a LoadBalancer. Access it using the external IP provided by the LoadBalancer, which appears in the EXTERNAL-IP column of kubectl get services.
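On clusters without a load balancer integration, such as a plain local Minikube setup, the external IP of a LoadBalancer service can stay in a pending state. One possible alternative is a NodePort service, sketched here. The name `nginx-nodeport` and the port 30080 are hypothetical choices.

```yaml
# Hypothetical NodePort variant of the service above.
apiVersion: v1
kind: Service
metadata:
  name: nginx-nodeport
spec:
  type: NodePort
  selector:
    app: nginx               # same pods as the nginx deployment
  ports:
  - protocol: TCP
    port: 80                 # port inside the cluster
    targetPort: 80           # port on the container
    nodePort: 30080          # port opened on every node (default range 30000-32767)
```

With this in place, the application should be reachable on port 30080 of any node's IP address.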

Advanced Kubernetes Features

Advanced Kubernetes features help developers manage and optimize their applications. These features make Kubernetes a powerful tool for containerized environments. Two key advanced features are scaling applications and self-healing mechanisms.

Scaling Applications

Kubernetes makes scaling applications easy and automatic. This helps maintain performance during traffic spikes.

- Horizontal Pod Autoscaler (HPA): Adjusts the number of pods based on CPU or custom metrics.

- Vertical Pod Autoscaler (VPA): Adjusts the resource limits and requests of individual pods.

- Cluster Autoscaler: Adds or removes nodes in a cluster based on resource needs.

These tools ensure applications always have enough resources. They help handle varying loads efficiently.
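As a sketch of the Horizontal Pod Autoscaler, the manifest below targets the `nginx-deployment` from the earlier example. The name `nginx-hpa`, the replica bounds, and the 70% target are illustrative assumptions. It also assumes a metrics source such as metrics-server is installed and that the containers declare CPU requests, since utilization is measured against them.

```yaml
# Hypothetical autoscaler; bounds and target are illustrative.
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: nginx-hpa
spec:
  scaleTargetRef:            # the workload to scale
    apiVersion: apps/v1
    kind: Deployment
    name: nginx-deployment
  minReplicas: 3
  maxReplicas: 10
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 70   # aim for 70% of requested CPU across pods
```

When average CPU use rises above the target, the autoscaler adds pods up to the maximum. When it falls, pods are removed down to the minimum.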

Self-healing Mechanisms

Kubernetes can automatically fix problems in your applications. This feature keeps your applications running smoothly.

- Pod Health Checks: Kubernetes uses liveness and readiness probes to monitor pod health.

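- Automatic Restarts: If a container fails its liveness probe, Kubernetes restarts it. A pod that fails its readiness probe keeps running but stops receiving traffic from services until it passes again.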

- ReplicaSets: Ensure a specified number of pod replicas are always running.

Self-healing mechanisms reduce downtime and manual intervention. They make applications more reliable.
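The health checks above might be configured as in the sketch below. The deployment name `nginx-probed`, the probed path, and the timing values are assumptions chosen for illustration.

```yaml
# Hypothetical deployment showing liveness and readiness probes.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-probed
spec:
  replicas: 3                  # the underlying ReplicaSet keeps three pods running
  selector:
    matchLabels:
      app: nginx-probed
  template:
    metadata:
      labels:
        app: nginx-probed
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        ports:
        - containerPort: 80
        livenessProbe:         # failure here restarts the container
          httpGet:
            path: /
            port: 80
          initialDelaySeconds: 5
          periodSeconds: 10
        readinessProbe:        # failure here removes the pod from service traffic
          httpGet:
            path: /
            port: 80
          periodSeconds: 5
```

Using both probes separates "the process is broken and needs a restart" from "the process is alive but not yet able to serve requests".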

Here’s a summary table of these advanced features:

| Feature | Description |
|---|---|
| Horizontal Pod Autoscaler (HPA) | Adjusts pod count based on CPU or custom metrics |
| Vertical Pod Autoscaler (VPA) | Adjusts resource limits and requests of pods |
| Cluster Autoscaler | Adds or removes nodes based on resource needs |
| Pod Health Checks | Uses probes to monitor pod health |
| Automatic Restarts | Restarts containers that fail liveness probes |
| ReplicaSets | Ensures a specified number of pod replicas are running |

Impact On DevOps Practices

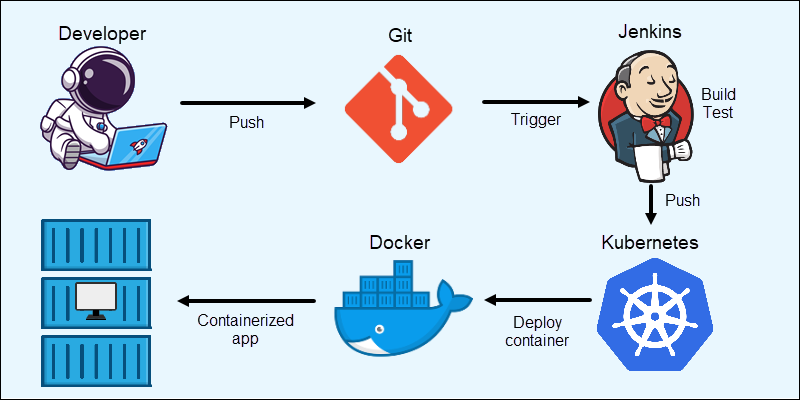

Containerization and Kubernetes have drastically transformed DevOps practices. They enable faster development, better collaboration, and seamless deployment. The integration of these technologies into DevOps pipelines has led to significant improvements.

Improving CI/CD Pipelines

Containers streamline the Continuous Integration/Continuous Deployment (CI/CD) process. They provide a consistent environment across all stages. Developers can build, test, and deploy applications more efficiently.

- Consistency: Containers ensure the same environment for development, testing, and production.

- Isolation: Each application runs in its own container, reducing conflicts.

- Speed: Containers are lightweight and can be quickly spun up or down.

Kubernetes adds another layer of efficiency. It automates the deployment, scaling, and management of containerized applications. This makes the CI/CD pipelines more robust and scalable.

| Feature | Benefit |
|---|---|
| Automated Deployment | Reduces manual errors and speeds up releases |
| Scalability | Handles increased loads automatically |
| Self-Healing | Automatically restarts failed containers |

Enhancing Collaboration

Containerization and Kubernetes enhance collaboration among teams. They standardize the development environment, making it easier for developers, testers, and operations teams to work together.

Developers benefit from a consistent environment that mirrors production. This reduces the “it works on my machine” problem.

Testers can deploy containers that replicate production settings, ensuring accurate test results.

Operations teams find it easier to manage and monitor applications. Kubernetes exposes container logs and resource metrics, which tools like Prometheus and Grafana build on for monitoring and alerting.

- Standardized Environments

- Streamlined Workflows

- Improved Communication

Overall, containerization and Kubernetes foster a culture of collaboration. They bring together different teams, making DevOps practices more efficient and effective.

Challenges And Solutions

Containerization and Kubernetes offer great benefits, but they also come with challenges. This section covers the common ones and the best practices for addressing them.

Common Challenges

- Complexity: Kubernetes has a steep learning curve.

- Security: Ensuring security at every layer is tough.

- Resource Management: Optimizing resources can be tricky.

- Networking: Networking within clusters can be confusing.

- Monitoring: Keeping track of containers is challenging.

Best Practices For Overcoming Challenges

Complexity

Break down Kubernetes concepts into small parts. Use tutorials and online courses.

Security

- Use Role-Based Access Control (RBAC) for permissions.

- Regularly scan for vulnerabilities.

- Use network policies to control traffic.
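A minimal RBAC sketch, assuming a hypothetical user `jane` who should only be able to read pods in the `default` namespace. The role and binding names are illustrative.

```yaml
# Hypothetical role: read-only access to pods in one namespace.
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  namespace: default
  name: pod-reader
rules:
- apiGroups: [""]              # "" means the core API group
  resources: ["pods"]
  verbs: ["get", "list", "watch"]
---
# Hypothetical binding: grants the role to one user.
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: read-pods
  namespace: default
subjects:
- kind: User
  name: jane                   # illustrative user name
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: pod-reader             # must match the Role above
  apiGroup: rbac.authorization.k8s.io
```

Granting only the verbs a user needs keeps the impact of a compromised or misused account small.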

Resource Management

Use auto-scaling features. Monitor resource usage with tools like Prometheus.

Networking

Understand network policies. Use tools like Calico for network control.
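As one possible shape for a network policy, the sketch below lets only pods carrying a hypothetical `role: frontend` label reach the Nginx pods on port 80. The policy name is illustrative. It only takes effect if the cluster's network plugin enforces network policies, which Calico does.

```yaml
# Hypothetical policy restricting inbound traffic to the nginx pods.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-frontend-to-nginx
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: nginx               # pods this policy protects
  policyTypes:
  - Ingress
  ingress:
  - from:
    - podSelector:
        matchLabels:
          role: frontend       # illustrative label for allowed clients
    ports:
    - protocol: TCP
      port: 80
```

Once a pod is selected by an ingress policy, inbound traffic not explicitly allowed by some policy is dropped.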

Monitoring

Use logging and monitoring tools. Tools like Grafana and ELK Stack are helpful.


Future Of Containerization And Kubernetes

The future of containerization and Kubernetes looks bright. These technologies are growing fast, and many industries now use them. Let’s explore the emerging trends and long-term benefits.

Emerging Trends

New trends in containerization are on the rise. Here are some key points:

- Serverless Containers: More companies run containers on managed platforms that remove the need to provision or manage servers. This can help save costs.

- Edge Computing: Containers at the edge reduce latency. This helps in real-time data processing.

- Security Enhancements: Security is a big focus. Tools for container security are improving.

- AI and ML Integration: AI and ML workloads are now containerized. This makes deployments easier.

Long-term Benefits

Containerization and Kubernetes offer several long-term benefits.

| Benefit | Description |
|---|---|
| Scalability | Containers can scale quickly. This meets growing demands. |
| Portability | Containers run anywhere. They ensure consistent environments. |
| Efficiency | Resources are used better. This saves costs. |
| Automation | Kubernetes automates tasks. This reduces manual work. |

Companies that adopt these technologies will stay ahead. They will enjoy improved performance and cost savings.


Frequently Asked Questions

What Is Containerization And Kubernetes?

Containerization packages software and dependencies into isolated units called containers. Kubernetes automates deployment, scaling, and management of containerized applications.

What Is The Difference Between Containers And Kubernetes?

Containers package applications with their dependencies, ensuring consistency across environments. Kubernetes manages and orchestrates these containers, automating deployment, scaling, and operations.

What Is The Difference Between Kubernetes And Deployment?

Kubernetes is an open-source platform for managing containerized applications. A Deployment is a Kubernetes resource that declares the desired state for a set of pods, such as the container image and replica count, and manages rolling out changes to them.

What Is The Difference Between Kubernetes And Pod?

Kubernetes is an open-source platform for managing containerized applications. A pod is the smallest deployable unit in Kubernetes, consisting of one or more containers.
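To make "one or more containers" concrete, here is a sketch of a pod with two containers that share a volume. The names `two-containers`, `web`, and `writer`, and the shell loop, are hypothetical.

```yaml
# Hypothetical pod: a web server plus a helper that writes the page it serves.
apiVersion: v1
kind: Pod
metadata:
  name: two-containers
spec:
  volumes:
  - name: shared
    emptyDir: {}               # scratch volume that lives as long as the pod
  containers:
  - name: web
    image: nginx:latest
    volumeMounts:
    - name: shared
      mountPath: /usr/share/nginx/html
  - name: writer
    image: busybox:latest
    # Rewrites index.html with the current date every five seconds.
    command: ["sh", "-c", "while true; do date > /html/index.html; sleep 5; done"]
    volumeMounts:
    - name: shared
      mountPath: /html
```

Both containers are scheduled onto the same node together and share the pod's network and volumes, which is why the pod, not the container, is the unit Kubernetes deploys.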

Conclusion

Containerization and Kubernetes offer powerful solutions for modern application deployment. They enhance scalability, efficiency, and flexibility. By adopting these technologies, businesses can streamline operations and reduce costs. Stay ahead in the competitive landscape by leveraging containerization and Kubernetes. Embrace these tools to future-proof your IT infrastructure and achieve greater success.