Reinforcement Learning (RL) is a machine learning technique where agents learn to make decisions by interacting with an environment. Agents aim to maximize cumulative rewards through trial and error.

Reinforcement Learning loosely mirrors how humans learn from experience: an agent takes actions toward a goal and is guided by rewards and penalties. RL is widely used in robotics, gaming, and autonomous systems, where it helps optimize complex sequences of decisions. Q-learning and Deep Q-Networks (DQN) are popular RL methods.

Businesses use RL for personalized recommendations and dynamic pricing. RL’s potential in artificial intelligence continues to grow, making it a crucial area in machine learning research. Understanding RL can lead to more efficient and intelligent systems.


Introduction To Reinforcement Learning

Reinforcement Learning (RL) is a type of machine learning. It teaches machines to make decisions. Machines learn by interacting with their environment. They get rewards or penalties based on their actions. The goal is to maximize the total reward.

The Concept Of Learning Through Interaction

In RL, learning happens through trial and error. The machine, called an agent, explores its environment. It takes actions and observes outcomes. The agent learns which actions yield the most rewards.

Here is a simple example:

| Action | Outcome | Reward |
|---|---|---|
| Move forward | Reach goal | +10 |
| Move left | Hit wall | -5 |
| Move right | Fall off | -10 |

The agent uses these experiences to improve its actions. It aims to get the highest reward over time.
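A minimal sketch of this trial-and-error process, assuming each action in the table always produces the listed reward (a deterministic, one-step setting). The agent picks actions at random and keeps a running average of the reward it has observed for each one. `REWARDS` and `estimate_action_values` are illustrative names, not part of any library.

```python
import random

# Rewards from the table above, assumed deterministic for this sketch
REWARDS = {"move forward": 10, "move left": -5, "move right": -10}

def estimate_action_values(trials: int, seed: int = 0) -> dict[str, float]:
    """Try random actions and keep a running average of each action's reward."""
    rng = random.Random(seed)
    values = {action: 0.0 for action in REWARDS}
    counts = {action: 0 for action in REWARDS}
    for _ in range(trials):
        action = rng.choice(list(REWARDS))  # trial: pick an action at random
        reward = REWARDS[action]            # feedback: observe the reward
        counts[action] += 1
        # Incremental mean: move the estimate toward the observed reward
        values[action] += (reward - values[action]) / counts[action]
    return values

values = estimate_action_values(trials=100)
best_action = max(values, key=values.get)
print(best_action)  # move forward
```

Once every action has been tried, the estimates match the table, and picking the action with the highest estimate gives the best choice.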

Real-world Applications

RL is used in many areas today. Here are some examples:

- Robotics: Robots learn to walk or pick up objects.

- Games: AI learns to play and master games like chess.

- Finance: Algorithms learn to make trading decisions.

- Healthcare: Systems learn to suggest treatments.

RL helps machines become smarter and more efficient.

Key Principles Behind Reinforcement Learning

Reinforcement Learning (RL) is a branch of machine learning. It focuses on how agents take actions in an environment to maximize cumulative reward. Two principles are central to understanding it: the reward system, and the balance between exploration and exploitation.

Reward Systems

In RL, the reward system is crucial. An agent gets a reward after each action. This reward tells the agent how good or bad the action was. The goal is to maximize the total reward over time.

| Action | Reward |
|---|---|
| Move Forward | +10 |
| Move Backward | -5 |
| Stay Still | 0 |

Positive rewards encourage actions. Negative rewards discourage actions. Over many interactions, the agent learns which actions lead to higher rewards; this feedback loop is what drives learning.
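The "total reward over time" is commonly formalized as the return: the sum of the rewards collected in an episode, often weighted by a discount factor gamma between 0 and 1 so that later rewards count for less. A minimal sketch, using the table's rewards in a made-up sequence of actions (the function name `discounted_return` is illustrative):

```python
def discounted_return(rewards: list[float], gamma: float = 1.0) -> float:
    """Compute G = r_0 + gamma*r_1 + gamma^2*r_2 + ... for one episode."""
    total = 0.0
    # Work backwards using G_t = r_t + gamma * G_{t+1}
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total

# Hypothetical episode: Move Forward, Stay Still, Move Backward, Move Forward
episode = [10, 0, -5, 10]
print(discounted_return(episode))             # 15.0
print(discounted_return(episode, gamma=0.9))  # about 13.24
```

With gamma = 1 every reward counts equally; with gamma = 0.9 the last +10 is worth only about 7.29 because it arrives three steps later.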

Exploration Vs. Exploitation

Exploration and exploitation are key concepts in RL. Exploration means trying new actions. Exploitation means using known actions to get rewards.

- Exploration: Helps the agent learn more about the environment.

- Exploitation: Helps the agent get the best rewards based on current knowledge.

There is a balance between exploration and exploitation. Too much exploration wastes time. Too much exploitation misses new opportunities. The agent must balance both to learn effectively.

In summary, understanding reward systems and balancing exploration vs. exploitation are essential in RL. These principles guide the agent in making decisions to maximize rewards.

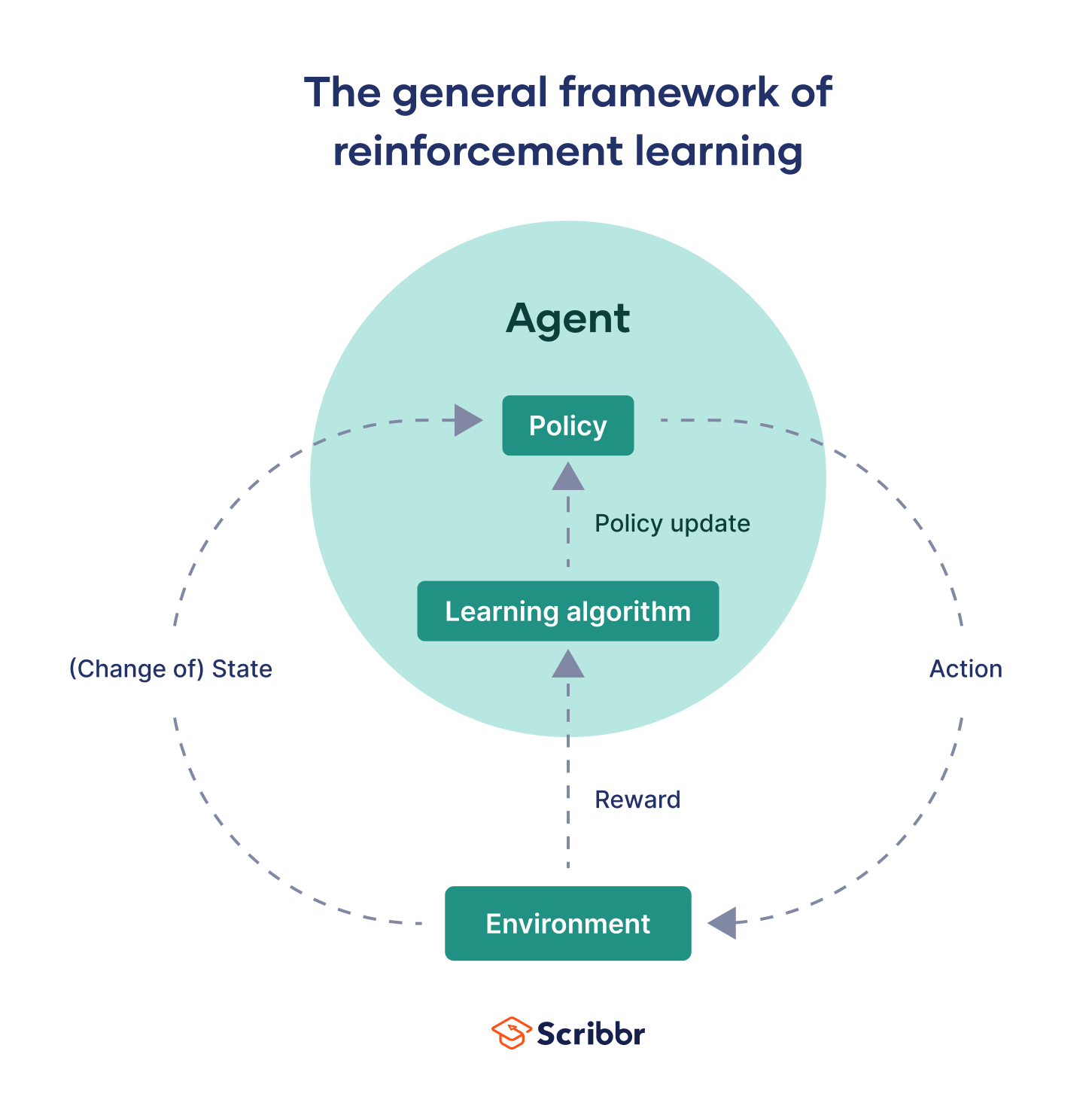

Essential Components Of Reinforcement Learning Models

Reinforcement learning (RL) models are powerful tools in artificial intelligence. They learn from interactions within their environment. These models consist of several key components. Understanding these components is crucial for leveraging RL effectively.

Understanding The Environment

The environment is where the agent operates. It includes everything the agent interacts with. The environment provides feedback to the agent. This feedback helps the agent learn and improve.

| Component | Description |
|---|---|
| State | The current situation or configuration. |
| Reward | Feedback from the environment after each action. |
| Action | The choices available to the agent (the action space). |

The Role Of The Agent

The agent is the learner and decision-maker. It interacts with the environment. The agent’s goal is to maximize rewards over time. It uses the feedback to adjust its actions.

- The agent observes the state.

- It selects an action based on the state.

- The environment responds with the next state and reward.
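The three steps above can be sketched as a short loop. `CorridorEnv` is a hypothetical toy environment made up for this illustration: the agent starts in cell 0 of a five-cell corridor, action 1 moves right, action 0 moves left, reaching the last cell ends the episode with a reward of +1, and every other step costs -0.1. The `reset`/`step` method names follow a common convention in Gym-style libraries, but this class is not any library's API.

```python
class CorridorEnv:
    """Hypothetical toy environment: a 1-D corridor with the goal at the right end."""

    def __init__(self, length: int = 5):
        self.length = length
        self.position = 0

    def reset(self) -> int:
        self.position = 0
        return self.position  # initial state

    def step(self, action: int) -> tuple[int, float, bool]:
        # Action 1 moves right, action 0 moves left; the walls clamp the position
        move = 1 if action == 1 else -1
        self.position = min(max(self.position + move, 0), self.length - 1)
        done = self.position == self.length - 1
        reward = 1.0 if done else -0.1
        return self.position, reward, done  # next state, reward, episode finished?

def run_episode(env: CorridorEnv, policy, max_steps: int = 100) -> float:
    state = env.reset()                         # 1. the agent observes the state
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(state)                  # 2. it selects an action
        state, reward, done = env.step(action)  # 3. the environment responds
        total_reward += reward
        if done:
            break
    return total_reward

def always_right(state: int) -> int:
    return 1

env = CorridorEnv()
print(run_episode(env, always_right))  # roughly 0.7: three -0.1 steps, then +1
```

The policy is just a function from state to action, so a learning agent can be swapped in without changing the loop.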

Action Selection Mechanisms

Action selection mechanisms determine how the agent chooses actions. They balance two competing needs:

- Exploration: Trying new actions.

- Exploitation: Choosing actions with known high rewards.

Common strategies include:

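- Epsilon-Greedy: Chooses a random action with probability epsilon and the action with the highest estimated value otherwise.

- Softmax: Chooses actions at random with probabilities that increase with their estimated values, so better actions are picked more often.

- Upper Confidence Bound (UCB): Adds a bonus to actions that have been tried less often, favoring actions whose value is still uncertain.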
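The three strategies can be sketched as functions that take the agent's current action-value estimates and return the index of the action to take. This is a minimal sketch: `q_values`, `temperature`, `counts`, and the exploration constant `c` are illustrative parameter names, and the UCB variant shown uses the common UCB1-style bonus c * sqrt(ln t / N(a)).

```python
import math
import random

def epsilon_greedy(q_values: list[float], epsilon: float, rng: random.Random) -> int:
    # With probability epsilon explore (random action); otherwise exploit (best estimate)
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])

def softmax_action(q_values: list[float], temperature: float, rng: random.Random) -> int:
    # Sample with probability proportional to exp(Q / temperature);
    # subtracting the max keeps exp() from overflowing
    m = max(q_values)
    weights = [math.exp((q - m) / temperature) for q in q_values]
    return rng.choices(range(len(q_values)), weights=weights, k=1)[0]

def ucb_action(q_values: list[float], counts: list[int], t: int, c: float = 2.0) -> int:
    # Try every action once, then add a bonus that shrinks as an action is tried more
    for a, n in enumerate(counts):
        if n == 0:
            return a
    scores = [q + c * math.sqrt(math.log(t) / n) for q, n in zip(q_values, counts)]
    return max(range(len(q_values)), key=lambda a: scores[a])

rng = random.Random(0)
q = [1.0, 2.0, 0.5]
print(epsilon_greedy(q, epsilon=0.0, rng=rng))  # 1 (pure exploitation)
print(ucb_action(q, counts=[10, 10, 1], t=21))  # 2 (rarely tried, large bonus)
```

In the UCB call, action 2 has the lowest estimate but has been tried only once, so its bonus outweighs the gap.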


Popular Algorithms In Reinforcement Learning

Reinforcement Learning (RL) is a field of machine learning. It is about making agents learn to make decisions. Many algorithms make RL work. Let’s explore some popular ones.

Q-learning Explained

Q-Learning is a basic RL algorithm. It helps agents learn the best actions. The agent gets rewards for actions. It learns from these rewards.

- Agent starts with no knowledge.

- Agent takes actions, often at random at first, to explore.

- Agent gets rewards or penalties.

- Agent updates its knowledge.

Q-Learning uses a table called a Q-table, which stores an estimated value for each state-action pair. The agent updates this table after every step using the Q-value update rule:

$$Q(\text{state}, \text{action}) \leftarrow Q(\text{state}, \text{action}) + \alpha \left[ \text{reward} + \gamma \max_{a'} Q(\text{next\_state}, a') - Q(\text{state}, \text{action}) \right]$$

Here is what the terms mean:

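| Term | Meaning |
|---|---|
| alpha (α) | Learning rate: how far each update moves the estimate (between 0 and 1) |
| gamma (γ) | Discount factor: how much future rewards count (between 0 and 1) |
| reward | Immediate reward received after taking the action |
| max(Q(next_state, all_actions)) | Estimated value of the best action in the next state |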
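Here is a minimal tabular Q-learning sketch that applies the update rule above. It assumes a hypothetical five-cell corridor (start in cell 0, goal in cell 4, actions 0 = left and 1 = right, reward +1 at the goal and -0.1 for every other step) and uses epsilon-greedy exploration. The values alpha = 0.1, gamma = 0.9, and epsilon = 0.1 are illustrative choices, not recommendations.

```python
import random

N_STATES, GOAL = 5, 4
ACTIONS = [0, 1]  # 0 = move left, 1 = move right

def step(state: int, action: int) -> tuple[int, float, bool]:
    # Hypothetical corridor dynamics: the walls clamp the position
    next_state = min(max(state + (1 if action == 1 else -1), 0), N_STATES - 1)
    done = next_state == GOAL
    return next_state, (1.0 if done else -0.1), done

def q_learning(episodes: int = 500, alpha: float = 0.1, gamma: float = 0.9,
               epsilon: float = 0.1, seed: int = 0) -> list[list[float]]:
    rng = random.Random(seed)
    q_table = [[0.0 for _ in ACTIONS] for _ in range(N_STATES)]  # starts with no knowledge
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: explore at random, otherwise take the best-known action
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q_table[state][a])
            next_state, reward, done = step(state, action)
            # Q-value update rule; the future term is 0 once the episode has ended
            best_next = 0.0 if done else max(q_table[next_state])
            td_target = reward + gamma * best_next
            q_table[state][action] += alpha * (td_target - q_table[state][action])
            state = next_state
    return q_table

q_table = q_learning()
greedy_policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(GOAL)]
print(greedy_policy)
```

After training, the greedy policy read off the Q-table should choose action 1 (move right) in every non-goal cell, since that is the shortest path to the +1 reward.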

Deep Reinforcement Learning

Deep Reinforcement Learning (DRL) uses deep neural networks. It handles complex problems that tabular Q-learning cannot, because it can learn from raw, high-dimensional data such as images or text.

DRL combines RL and deep learning. In value-based methods, a neural network predicts Q-values and replaces the Q-table, which lets the agent handle large and complex environments.

Popular DRL algorithms are:

- Deep Q-Network (DQN)

- Policy Gradient Methods

- Actor-Critic Methods

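DQN uses a neural network to predict Q-values. It computes its training targets with a separate target network, a periodically updated copy of the main network, and trains on past experiences sampled from a replay buffer. Both techniques make learning more stable.

Policy Gradient Methods learn the policy directly, adjusting its parameters so that actions that led to high rewards become more likely. This makes them well suited to continuous action spaces.

Actor-Critic Methods combine the value-based and policy-based approaches. The actor chooses actions. The critic evaluates them. Using the critic's estimates usually makes learning more stable than a pure policy gradient method.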
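The target-network idea in DQN can be illustrated without a deep-learning framework. In this minimal NumPy sketch, the neural networks are replaced by hypothetical linear Q-functions (one weight matrix per network) so the arithmetic is easy to follow. The training target for each transition is reward + gamma * max Q_target(next_state, a'), with the future term dropped at the end of an episode, and the target weights are synced with the online weights only every 25 steps. The batch of transitions is made up.

```python
import numpy as np

rng = np.random.default_rng(0)
n_features, n_actions, gamma = 4, 2, 0.99

# Hypothetical linear Q-functions standing in for neural networks: Q(s) = s @ W
online_w = rng.normal(size=(n_features, n_actions))
target_w = online_w.copy()  # the target network starts as a copy of the online network

def q_values(states: np.ndarray, weights: np.ndarray) -> np.ndarray:
    return states @ weights  # shape (batch, n_actions)

def dqn_targets(rewards, next_states, dones, weights) -> np.ndarray:
    # y = r + gamma * max_a' Q_target(s', a'), with no future term at episode end
    next_max = q_values(next_states, weights).max(axis=1)
    return rewards + gamma * next_max * (1.0 - dones)

# A small batch of made-up transitions (as if sampled from a replay buffer)
batch = 3
states = rng.normal(size=(batch, n_features))
actions = np.array([0, 1, 0])
rewards = np.array([1.0, 0.0, -1.0])
next_states = rng.normal(size=(batch, n_features))
dones = np.array([0.0, 0.0, 1.0])

learning_rate = 0.01
for step in range(1, 101):
    targets = dqn_targets(rewards, next_states, dones, target_w)  # uses the frozen copy
    predicted = q_values(states, online_w)[np.arange(batch), actions]
    errors = predicted - targets
    # Gradient step on the mean squared error, only for the actions actually taken
    grad = np.zeros_like(online_w)
    for i in range(batch):
        grad[:, actions[i]] += 2 * errors[i] * states[i] / batch
    online_w -= learning_rate * grad
    if step % 25 == 0:
        target_w = online_w.copy()  # periodic sync keeps targets fixed in between
```

Between syncs the targets do not move while the online weights change, which is the stabilizing effect the target network is meant to provide.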

Training Strategies For Effective Learning

Reinforcement Learning (RL) is a type of machine learning. It focuses on how agents should take actions in an environment to maximize rewards. Effective training strategies are crucial for RL to perform well. Below, we discuss some key strategies.

Reward Shaping Techniques

Reward shaping helps guide the agent towards desired behaviors. It modifies the reward signal to make learning faster and more effective.

There are various reward shaping techniques:

- Potential-based Reward Shaping: Adds the change in a potential function between consecutive states to the reward; this form leaves the optimal policy unchanged.

- State-based Shaping: Provides rewards based on the agent’s state.

- Action-based Shaping: Gives rewards for taking specific actions.

Reward shaping can speed up learning in complex environments, though a poorly designed shaping reward can change which behavior the agent ends up learning.
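Potential-based shaping is usually written as an extra term F(s, s') = gamma * Φ(s') - Φ(s) added to the environment's reward, where Φ is a potential function chosen by the designer. A minimal sketch, assuming a hypothetical corridor task whose potential is the negative distance to a goal cell; `GOAL`, `GAMMA`, and the function names are illustrative:

```python
GOAL = 4
GAMMA = 0.9

def potential(state: int) -> float:
    # Hypothetical potential: the closer to the goal, the higher the potential
    return -abs(GOAL - state)

def shaped_reward(reward: float, state: int, next_state: int, done: bool) -> float:
    # F(s, s') = gamma * phi(s') - phi(s); a terminal state's potential is taken as 0
    next_potential = 0.0 if done else potential(next_state)
    return reward + GAMMA * next_potential - potential(state)

print(shaped_reward(-0.1, state=1, next_state=2, done=False))  # positive: moved toward the goal
print(shaped_reward(-0.1, state=2, next_state=1, done=False))  # negative: moved away
```

The same step cost of -0.1 becomes a bonus of about 1.1 when the agent moves toward the goal and a penalty of about -0.8 when it moves away, giving feedback on every step instead of only at the goal.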

Dealing With Sparse Rewards

Sparse rewards occur when rewards are infrequent or delayed. This makes it hard for the agent to learn.

Here are some techniques to deal with sparse rewards:

- Intermediate Rewards: Design rewards for small achievements along the way.

- Hierarchical Learning: Break tasks into smaller subtasks with rewards.

- Exploration Strategies: Encourage the agent to explore more.

Using these methods can improve learning even with sparse rewards.
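One simple way to encourage exploration when rewards are sparse is a count-based bonus: the agent receives extra reward for visiting states it has rarely seen, for example beta / sqrt(N(s)), where N(s) is the number of visits to state s. This minimal sketch shows only that one idea; `CountBonus` and `beta` are illustrative names, and other exploration strategies exist.

```python
import math
from collections import defaultdict

class CountBonus:
    """Adds an exploration bonus beta / sqrt(N(s)) that shrinks as a state is revisited."""

    def __init__(self, beta: float = 0.1):
        self.beta = beta
        self.counts = defaultdict(int)  # visit count per state

    def __call__(self, state, reward: float) -> float:
        self.counts[state] += 1
        return reward + self.beta / math.sqrt(self.counts[state])

bonus = CountBonus(beta=0.1)
print(bonus("room_A", 0.0))  # 0.1 on the first visit
print(bonus("room_A", 0.0))  # about 0.0707 on the second visit
```

Even when the environment returns 0 almost everywhere, the agent still gets a signal that pushes it toward unfamiliar states.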

In summary, effective training strategies like reward shaping and handling sparse rewards are vital. They ensure the agent learns efficiently and performs optimally.


Challenges In Reinforcement Learning

Reinforcement Learning (RL) has many challenges. These challenges make it hard to apply RL in real-world scenarios. Understanding these challenges helps improve RL algorithms and applications.

Curse Of Dimensionality

The curse of dimensionality is a big problem in RL. As the number of state (or action) dimensions grows, the number of possible states grows exponentially. This makes searching for the best actions very slow and difficult.

For example, imagine a robot navigating a room. Its state might include its position, its heading, and the positions of nearby obstacles. Each additional state variable adds a dimension, and the number of possible states multiplies with every one, making the problem harder.

| Dimensions | Computation Time (qualitative) |
|---|---|
| 2 | Fast |
| 10 | Medium |
| 100 | Very Slow |
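A quick calculation shows why the slowdown is so steep. Assuming, for illustration, that each state variable is split into 10 bins, a tabular method needs one entry per combination of bins, which is 10 to the power of the number of dimensions:

```python
def num_states(dimensions: int, bins_per_dimension: int = 10) -> int:
    # Every combination of bin values is a distinct state
    return bins_per_dimension ** dimensions

for d in (2, 10, 100):
    print(d, num_states(d))
# 2 dimensions -> 100 states; 10 -> 10,000,000,000; 100 -> a 101-digit number
```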

Reducing the number of dimensions, for example with Principal Component Analysis (PCA) or feature selection, can help, as can algorithms that generalize across similar states, such as the neural networks used in deep RL.

Stability And Convergence Issues

Stability and convergence are key issues in RL. An RL algorithm must learn a stable policy, and ideally that policy should converge to an optimal (or near-optimal) solution over time.

Many factors affect stability and convergence:

- Learning rate

- Exploration vs. Exploitation balance

- Algorithm choice

If the learning rate is too high, the algorithm may never stabilize. If too low, learning takes too long. Balancing exploration and exploitation is also tricky. Too much exploration wastes time. Too little misses better solutions.
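The learning-rate trade-off can be seen in the simplest update of the same form as the Q-learning rule: moving an estimate toward a fixed target with estimate += alpha * (target - estimate). This is an illustrative sketch, not a full RL algorithm. The remaining error is multiplied by (1 - alpha) at every step, so a small alpha creeps toward the target, a moderate alpha gets there quickly, and an alpha above 2 makes the error grow every step.

```python
def run_updates(alpha: float, target: float = 1.0, steps: int = 20) -> float:
    estimate = 0.0
    for _ in range(steps):
        # Move a fraction alpha of the way from the estimate to the target
        estimate += alpha * (target - estimate)
    return estimate

for alpha in (0.01, 0.5, 2.5):
    print(alpha, run_updates(alpha))
# 0.01: still far from 1 after 20 steps; 0.5: almost exactly 1; 2.5: diverges
```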

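Popular algorithms address these issues in different ways. Tabular Q-Learning is guaranteed to converge under certain conditions, such as visiting every state-action pair often enough with a suitably decreasing learning rate, and Deep Q-Networks (DQN) use a target network and experience replay to improve stability. Even so, both usually need fine-tuning for each problem.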

Advancements In Reinforcement Learning

Reinforcement Learning (RL) is a part of machine learning. It helps machines learn from their actions. Recent advancements have made RL more powerful. These improvements enable machines to solve complex problems.

Transfer Learning In RL

Transfer Learning is a big step in RL. It allows a model to use knowledge from one task in another. This saves time and resources.

For example, consider a robot that learns to walk. With Transfer Learning, the robot can reuse this knowledge to learn to run more quickly than it would starting from scratch.

| Task | Knowledge Applied |
|---|---|
| Walking | Basic Movement |
| Running | Fast Movement |

Multi-agent Systems

Multi-agent Systems involve multiple agents acting in the same environment. These agents can learn and make decisions, and they may cooperate or compete. In cooperative settings, they share information to achieve a common goal.

For instance, consider a team of drones. Each drone can learn its role, and together they can complete a search and rescue mission. Key aspects of such systems include:

- Coordination among agents

- Shared learning

- Efficient problem-solving

Implementing Reinforcement Learning Projects

Implementing Reinforcement Learning (RL) projects can be exciting and challenging. It involves several critical steps to achieve success. Two vital steps are choosing the right environment and using suitable tools and libraries.

Choosing The Right Environment

Choosing the right environment is crucial for RL projects. An environment defines the task your agent will learn. Several environments are available for testing RL algorithms. Popular choices include OpenAI Gym and Unity ML-Agents.

- OpenAI Gym (now maintained as Gymnasium): A library with a standard interface and many ready-made environments.

- Unity ML-Agents: A toolkit for creating custom environments.

Consider the complexity and goals of your project. Match the environment to your needs. Some environments offer simple tasks. Others provide complex and dynamic scenarios.

Tools And Libraries For Development

Several tools and libraries help develop RL projects. These tools simplify coding and testing RL algorithms. Here are some popular choices:

| Tool/Library | Description |
|---|---|
| TensorFlow | A library for building neural networks. |
| PyTorch | An easy-to-use deep learning library. |
| Stable Baselines (now Stable Baselines3) | A collection of ready-to-use RL algorithm implementations. |

These tools support various RL algorithms. They also provide pre-built models and utilities. Using them can save time and effort.

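Below is an example code snippet using TensorFlow and Gymnasium, the maintained successor to OpenAI Gym:

```python
# Requires the gymnasium and tensorflow packages
import gymnasium as gym
import numpy as np
import tensorflow as tf

# Create the environment
env = gym.make("CartPole-v1")
n_inputs = env.observation_space.shape[0]
n_actions = env.action_space.n

# Define the neural network model: one Q-value estimate per action
model = tf.keras.Sequential([
    tf.keras.Input(shape=(n_inputs,)),
    tf.keras.layers.Dense(24, activation="relu"),
    tf.keras.layers.Dense(24, activation="relu"),
    tf.keras.layers.Dense(n_actions, activation="linear"),
])

# Compile the model
model.compile(optimizer="adam", loss="mse")

# Example of training loop (simplified)
for episode in range(1000):
    state, _ = env.reset()  # reset() returns (observation, info)
    done = False
    while not done:
        # predict() expects a batch, so add a leading axis; pick the highest Q-value
        q_values = model.predict(state[np.newaxis], verbose=0)
        action = int(np.argmax(q_values[0]))
        # step() returns (observation, reward, terminated, truncated, info)
        next_state, reward, terminated, truncated, _ = env.step(action)
        done = terminated or truncated
        # Update the model here
        state = next_state
```

This skeleton sets up a basic RL project. Gymnasium provides the environment, and TensorFlow provides the neural network, which estimates one Q-value per action. The learning update itself is omitted; a DQN-style agent would add it at the marked line.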

Future Of Reinforcement Learning

The future of Reinforcement Learning (RL) looks very promising. RL can solve many complex problems. It can learn from its own actions. This makes it a powerful tool for many applications.

Potential Impact On Various Industries

RL can change many industries. Below are some key sectors that RL can transform:

- Healthcare: RL can help in personalized treatment plans.

- Finance: RL can improve trading algorithms.

- Transportation: RL can optimize routes for delivery services.

- Manufacturing: RL can automate quality control processes.

Emerging Research Directions

Researchers are exploring new ways to improve RL. Some of the emerging areas include:

- Safe RL: Ensuring RL systems make safe decisions.

- Multi-Agent RL: Learning how multiple agents can work together.

- Transfer Learning: Applying knowledge from one task to another.

These research directions aim to make RL more robust and applicable. Future advancements in RL will bring about more innovative solutions.

Frequently Asked Questions

What Is The Difference Between ML And RL?

Reinforcement Learning (RL) is a branch of Machine Learning (ML). Most other ML approaches, such as supervised learning, train models on a fixed dataset and focus on prediction and classification. RL instead trains an agent through trial and error and emphasizes sequential decision-making and rewards.

What Is An Example Of Reinforcement Learning?

A classic example of reinforcement learning is training an AI to play chess. The AI learns by receiving rewards or penalties based on its moves.

What Are The Three Main Types Of Reinforcement Learning?

Three common categories of reinforcement learning methods are:

1. **Value-Based**: Learns a value function, such as Q-values, and picks the actions with the highest value.

2. **Policy-Based**: Directly optimizes the policy function.

3. **Model-Based**: Learns or uses a model of the environment to simulate outcomes for planning.

What Are The Basics Of Reinforcement Learning?

Reinforcement learning involves training agents using rewards and punishments. Agents learn optimal actions through trial and error. Key elements include states, actions, rewards, and policies. Algorithms like Q-learning and Deep Q-Networks are common. The goal is to maximize cumulative reward.

Conclusion

Reinforcement learning offers immense potential for advancements in various fields. By mastering this technology, businesses can optimize processes and innovate efficiently. Embracing reinforcement learning can lead to smarter systems and improved decision-making. Stay updated with the latest trends to fully leverage its benefits in your industry.